The Art and Science of Capturing Impulse Responses for Convolution Reverb

In the world of audio production, few tools are as transformative as convolution reverb. Unlike algorithmic reverbs that simulate spaces through mathematical models, convolution reverb relies on actual acoustic snapshots called impulse responses (IRs). These IRs serve as sonic fingerprints of real-world environments, allowing producers to place sounds in anything from grand cathedrals to intimate studios with uncanny realism. The process of capturing these impulse responses, however, is both an art and a science that demands precision, patience, and a deep understanding of acoustics.

The Pulse of the Matter

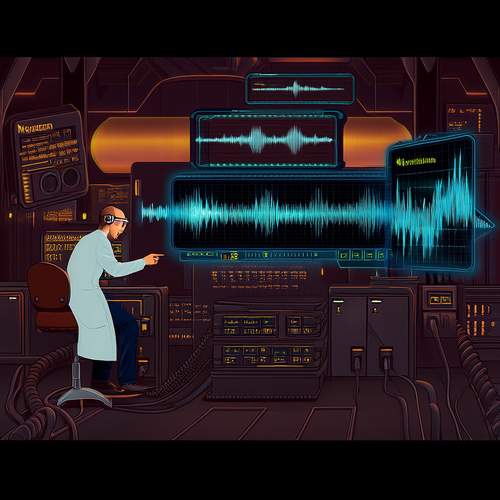

At its core, an impulse response represents how a space reacts to an instantaneous sound: the audio equivalent of throwing a pebble into a pond and mapping every ripple. In theory, the perfect impulse would be infinitely short and infinitely loud, containing all frequencies equally. In practice, audio engineers use starter pistols, balloon pops, or specialized exponential sine sweeps to approximate this ideal. The resulting recording captures not just the reverb tail, but the complete linear character of the space, including early reflections and frequency-dependent absorption. Because convolution is a linear operation, an IR cannot reproduce nonlinear behavior; distortion in the measurement chain ends up as an artifact in the capture rather than as part of the model.
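To make the idea concrete, here is a minimal sketch of what a convolution reverb does with a captured IR: every input sample triggers a scaled copy of the IR, and the copies are summed. The `convolve` helper and the three-tap `toy_ir` are illustrative assumptions, not measured data, and real processors would use FFT-based convolution for speed.

```python
def convolve(signal, ir):
    """Direct-form linear convolution of two sequences of floats."""
    out = [0.0] * (len(signal) + len(ir) - 1)
    for i, s in enumerate(signal):
        if s == 0.0:
            continue  # silent samples contribute nothing
        for j, h in enumerate(ir):
            out[i + j] += s * h
    return out


# Hypothetical toy IR: direct sound, then one reflection two samples later.
toy_ir = [1.0, 0.0, 0.5]

# A unit impulse played into the "room" returns the IR itself.
impulse = [1.0, 0.0, 0.0]
print(convolve(impulse, toy_ir))  # [1.0, 0.0, 0.5, 0.0, 0.0]

# Any other dry signal comes back as overlapping, scaled copies of the IR.
dry = [1.0, 2.0]
print(convolve(dry, toy_ir))      # [1.0, 2.0, 0.5, 1.0]
```

The first call shows why the measurement is called an impulse response: feeding the system a single unit sample reads the IR straight back out.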

Modern IR capture techniques have evolved significantly from early methods that relied on literal gunshots in concert halls. Today's professionals often use sine sweep excitations, which offer a better signal-to-noise ratio than impulsive sounds because the test energy is spread over several seconds instead of a single instant. By playing a tone that sweeps from 20 Hz to 20 kHz, recording the result, and deconvolving that recording against the known sweep, engineers can extract an impulse response with remarkable accuracy. This method separates the test signal from the room's characteristics while minimizing noise interference, and with an exponential sweep the loudspeaker's harmonic distortion lands ahead of the linear response, where it can be trimmed away.
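The sweep-and-deconvolve workflow can be sketched in a few lines. The example below is a simplified illustration under stated assumptions, not a production tool: it uses a short, band-limited sweep (100 Hz to 3 kHz at an 8 kHz sample rate) so that it runs quickly with only the standard library, it simulates the "room" as a hypothetical two-tap IR instead of using a real recording, and it deconvolves by convolving with a time-reversed, amplitude-compensated copy of the sweep. All function names are made up for this sketch; a real capture would use FFT-based processing and a full-range sweep.

```python
import math


def exp_sweep(f1, f2, n_samples, fs):
    """Exponential sine sweep from f1 to f2 Hz, n_samples long."""
    duration = n_samples / fs
    rate = math.log(f2 / f1)
    k = 2.0 * math.pi * f1 * duration / rate
    return [math.sin(k * (math.exp(n / n_samples * rate) - 1.0))
            for n in range(n_samples)]


def inverse_filter(sweep, f1, f2):
    """Time-reversed sweep with a decaying envelope.

    The envelope offsets the extra energy an exponential sweep
    puts into low frequencies, where it spends more time.
    """
    n_samples = len(sweep)
    rate = math.log(f2 / f1)
    return [sweep[n_samples - 1 - m] * math.exp(-m / n_samples * rate)
            for m in range(n_samples)]


def convolve(a, b):
    """Direct-form linear convolution (slow but dependency-free)."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, av in enumerate(a):
        if av == 0.0:
            continue
        for j, bv in enumerate(b):
            out[i + j] += av * bv
    return out


def extract_ir(recording, sweep, f1, f2, ir_len):
    """Deconvolve a sweep recording and return a peak-normalized IR."""
    full = convolve(recording, inverse_filter(sweep, f1, f2))
    start = len(sweep) - 1  # zero-lag position of the direct sound
    ir = full[start:start + ir_len]
    peak = max(abs(v) for v in ir)
    return [v / peak for v in ir]


FS, N, F1, F2 = 8000, 1600, 100.0, 3000.0
sweep = exp_sweep(F1, F2, N, FS)

# Hypothetical room: direct sound plus a half-strength echo 200 samples later.
room = [0.0] * 201
room[0], room[200] = 1.0, 0.5
recording = convolve(room, sweep)  # stands in for the microphone signal

ir = extract_ir(recording, sweep, F1, F2, 300)
# The echo should reappear near half the height of the direct sound.
print(round(ir[0], 2), round(ir[200], 2))
```

Because the sweep is band-limited, the recovered taps are narrow pulses with small sidelobes, not perfect single-sample spikes, so the echo lands close to 0.5 without matching it exactly.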

The Spatial Equation

Location selection for IR capture involves more than finding acoustically interesting spaces. Engineers must consider practical factors like ambient noise floors, temperature stability (which affects sound speed), and even air humidity (which absorbs high frequencies over distance). Legendary scoring stages like Abbey Road Studio 1 or the Sony Pictures scoring stage didn't become convolution reverb staples by accident – their carefully designed acoustics translate exceptionally well to the IR format.
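A rough calculation shows why temperature stability matters. The sketch below uses the common linear approximation for the speed of sound in dry air, c ≈ 331.3 + 0.606·T m/s with T in degrees Celsius; the 30 m reflection path and 48 kHz sample rate are illustrative assumptions, as are the function names.

```python
def speed_of_sound(temp_c):
    """Approximate speed of sound in dry air (m/s) at temp_c degrees Celsius."""
    return 331.3 + 0.606 * temp_c


def arrival_sample(path_m, temp_c, fs=48000):
    """Sample position at which a reflection with this path length arrives."""
    return path_m / speed_of_sound(temp_c) * fs


cool = arrival_sample(30.0, 15.0)  # reflection timing in a 15 °C hall
warm = arrival_sample(30.0, 25.0)  # same path after the hall warms to 25 °C
print(f"reflection arrives {cool - warm:.1f} samples earlier when warm")
```

A shift of this size between takes means that captures averaged or compared across a long session may not line up sample for sample unless the room temperature holds steady.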

Microphone placement during capture creates another layer of complexity. While stereo pairs are common, multi-array configurations using ambisonic or binaural setups can capture fully three-dimensional reverberation. Some engineers employ "golden ears" techniques, moving mics incrementally between sweeps to average multiple perspectives. The choice between omnidirectional and directional microphones presents another critical decision point, as it fundamentally shapes how the space's diffuse field gets represented in the final IR.

Beyond the Basics

Advanced IR capture pushes into psychoacoustic territory. Some engineers now capture "dynamic impulse responses" that account for how spaces respond differently to various input levels – crucial for accurately modeling nonlinear acoustic phenomena. Others experiment with multi-source excitation to simulate how spaces react to distributed sound sources rather than point origins. There's even growing interest in capturing "performative impulse responses" where the excitation source moves through space during the sweep, mimicking how musicians might move during a live performance.

The post-processing of raw IR captures has become equally sophisticated. While early convolution reverb libraries used raw recordings, modern IRs often undergo careful editing to remove pre-delay inconsistencies, normalize decay characteristics, or even blend multiple captures for idealized acoustic properties. Some engineers apply subtle equalization to compensate for microphone coloration, while others create hybrid IRs that merge the early reflections of one space with the reverb tail of another.
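The editing steps described above can be sketched as three small helpers: trimming pre-delay, peak normalization, and splicing the early reflections of one IR onto the tail of another with a linear crossfade. The function names, the -40 dB threshold, and the crossfade shape are assumptions chosen for illustration; commercial libraries may use quite different criteria.

```python
def trim_predelay(ir, threshold_db=-40.0):
    """Drop leading samples quieter than threshold_db relative to the peak."""
    peak = max((abs(v) for v in ir), default=0.0)
    threshold = peak * 10.0 ** (threshold_db / 20.0)
    for i, v in enumerate(ir):
        if abs(v) >= threshold:
            return ir[i:]
    return list(ir)


def normalize_peak(ir, target=1.0):
    """Scale the IR so its largest absolute sample equals target."""
    peak = max((abs(v) for v in ir), default=0.0)
    if peak == 0.0:
        return list(ir)
    return [v * target / peak for v in ir]


def hybrid_ir(early_src, tail_src, split, fade):
    """Early reflections from early_src, tail from tail_src.

    Samples before `split` come from early_src; over the next `fade`
    samples the two IRs are crossfaded linearly; the rest is tail_src.
    """
    if min(len(early_src), len(tail_src)) < split + fade:
        raise ValueError("both IRs must cover the crossfade region")
    out = []
    for n in range(len(tail_src)):
        if n < split:
            out.append(early_src[n])
        elif n < split + fade:
            g = (n - split) / fade  # 0.0 at the split, rising toward 1.0
            out.append((1.0 - g) * early_src[n] + g * tail_src[n])
        else:
            out.append(tail_src[n])
    return out


raw = [0.0, 0.0, 0.001, 1.0, 0.5]
print(trim_predelay(raw))               # [1.0, 0.5]
print(normalize_peak([0.5, -0.25]))     # [1.0, -0.5]
print(hybrid_ir([1.0] * 6, [0.0] * 6, split=2, fade=2))
# [1.0, 1.0, 1.0, 0.5, 0.0, 0.0]
```

In practice both source IRs would usually be trimmed and level-matched before splicing, so the crossfade joins material with the same timing reference.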

The Future Echo

As convolution technology advances, so do IR capture methodologies. Emerging techniques include laser vibrometry to capture surface vibrations as part of the impulse response, and AI-assisted deconvolution that can extract usable IRs from imperfect source material. There's also growing interest in capturing not just acoustic spaces, but the impulse responses of vintage gear, allowing convolution processors to emulate the linear character of everything from classic spring reverbs to magnetic tape delay.

The democratization of IR capture tools has led to an explosion of niche reverb libraries. Where once only major studios could create professional impulse responses, today's engineers can capture spaces using nothing more than a laptop, an audio interface, and a decent microphone. This accessibility comes with challenges, since the market is flooded with poorly captured IRs, but also opportunities, as unique spaces worldwide get documented and shared. From underground cisterns to abandoned factories, the impulse response has become audio's ultimate postcard from acoustically interesting locations.

Ultimately, the art of impulse response capture continues evolving alongside audio technology itself. As virtual reality and spatial audio grow in importance, the demand for more detailed, more immersive impulse responses will only increase. What began as a clever way to digitize reverb has become an entire subdiscipline of audio engineering – one that bridges the physical and digital worlds through the universal language of sound.

By /May 30, 2025
